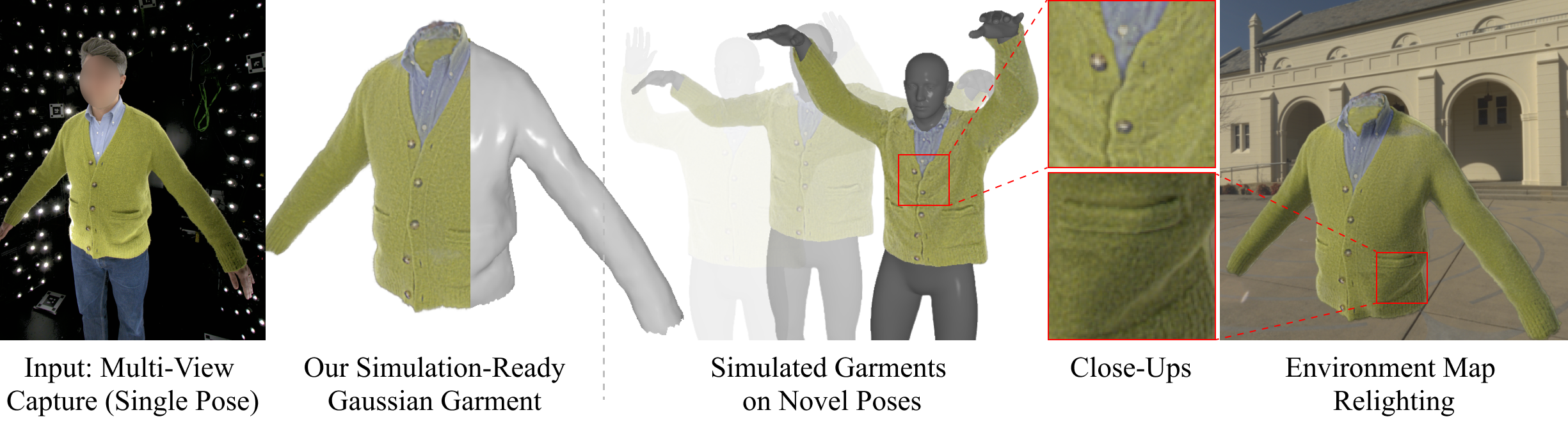

We introduce a novel approach to reconstruct simulation-ready garments with intricate appearance.

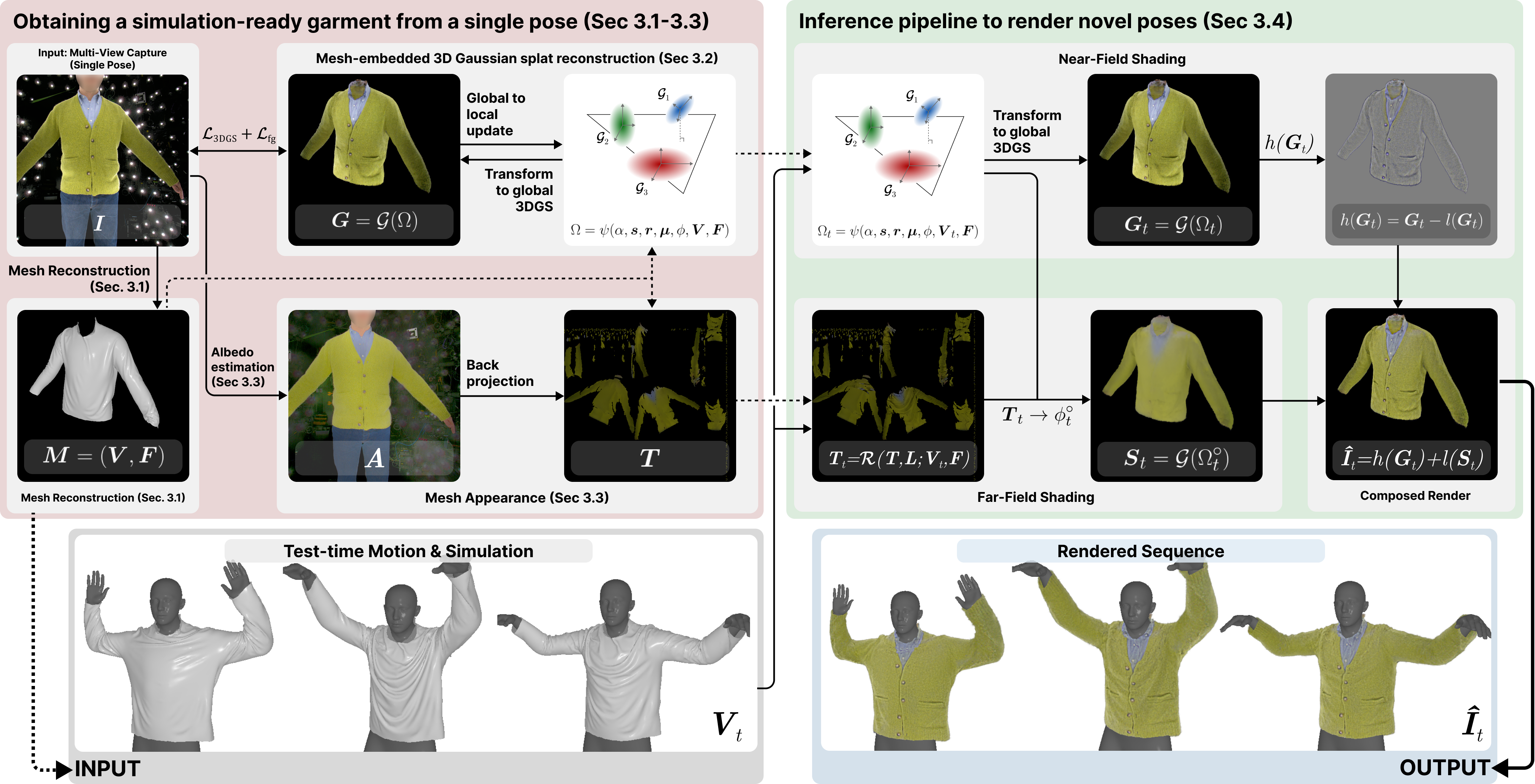

Despite recent advances, existing methods often struggle to balance accurate garment reconstruction against generalization to new poses and body shapes, or they require large amounts of data to achieve both. In contrast, our method requires only a multi-view capture of a single static frame. We represent garments as hybrid mesh-embedded 3D Gaussian splats (or simply Gaussians), where the Gaussians capture near-field shading and high-frequency details, while the mesh encodes far-field albedo and optimized reflectance parameters.
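The text does not spell out how the Gaussians are attached to the mesh. A common way to embed splats in a mesh, and the one assumed in this minimal sketch, is to store for each Gaussian a triangle index, barycentric coordinates, and a signed offset along the triangle normal. When the simulated mesh deforms, the Gaussian centers are recomputed from the new vertex positions. The function `embedded_gaussian_centers` and its parameters are hypothetical, and only centers are handled here; rotations and scales would also need to follow the local triangle frame.

```python
import numpy as np


def embedded_gaussian_centers(vertices, faces, face_idx, bary, offset):
    """Hypothetical helper: place Gaussian centers on a (possibly deformed) mesh.

    vertices: (V, 3) float array of mesh vertex positions
    faces:    (F, 3) int array of vertex indices per triangle
    face_idx: (N,) int array, the triangle each Gaussian is attached to
    bary:     (N, 3) barycentric coordinates of each Gaussian (rows sum to 1)
    offset:   (N,) signed distance along the triangle's unit normal
    """
    tri = vertices[faces[face_idx]]                    # (N, 3, 3) triangle corners
    on_surface = np.einsum("nk,nkd->nd", bary, tri)    # barycentric interpolation
    normal = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])
    normal /= np.linalg.norm(normal, axis=1, keepdims=True)  # unit face normals
    return on_surface + offset[:, None] * normal       # lift off the surface


# Usage: one triangle in the xy-plane with one Gaussian at its centroid.
verts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
faces = np.array([[0, 1, 2]])
face_idx = np.array([0])
bary = np.array([[1 / 3, 1 / 3, 1 / 3]])
offset = np.array([0.1])

rest = embedded_gaussian_centers(verts, faces, face_idx, bary, offset)
# "Simulate" a deformation by translating the mesh; the Gaussian follows it.
moved = embedded_gaussian_centers(verts + [0.0, 0.0, 2.0], faces, face_idx, bary, offset)
```

Because the splats are parameterized relative to triangles rather than in world space, any mesh produced by a cloth simulator can drive them without re-optimization.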

We achieve generalization to novel poses by exploiting the mesh component of our hybrid representation, which enables physics-based simulation and surface rendering techniques, while the Gaussians capture fine garment details. Our optimized garments can be simulated on novel poses and relit under new lighting.
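Because the mesh carries albedo, it can be re-shaded under new lighting with standard surface rendering. The exact physically-based shading model is not given here, so as a stand-in this sketch applies simple Lambertian diffuse shading to per-vertex albedo under a single directional light. `vertex_normals`, `shade_lambert`, and their parameters are hypothetical names, and a full reflectance model with the optimized parameters mentioned above would replace the Lambert term.

```python
import numpy as np


def vertex_normals(vertices, faces):
    """Area-weighted vertex normals (hypothetical helper)."""
    v0, v1, v2 = (vertices[faces[:, i]] for i in range(3))
    face_n = np.cross(v1 - v0, v2 - v0)      # length is proportional to triangle area
    normals = np.zeros_like(vertices)
    for i in range(3):
        np.add.at(normals, faces[:, i], face_n)  # accumulate onto each corner vertex
    return normals / np.linalg.norm(normals, axis=1, keepdims=True)


def shade_lambert(albedo, normals, light_dir, light_rgb, ambient=0.0):
    """Lambertian stand-in for the paper's shading model.

    Returns albedo * (ambient + max(n . l, 0) * light_rgb); the 1/pi of the
    Lambert BRDF is assumed to be folded into light_rgb.
    """
    l = np.asarray(light_dir, dtype=float)
    l = l / np.linalg.norm(l)
    ndotl = np.clip(normals @ l, 0.0, None)[:, None]
    return albedo * (ambient + ndotl * np.asarray(light_rgb, dtype=float))


# Usage: relight one triangle facing +z under three light directions.
verts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
faces = np.array([[0, 1, 2]])
albedo = np.array([[0.8, 0.2, 0.2]] * 3)
n = vertex_normals(verts, faces)

overhead = shade_lambert(albedo, n, [0, 0, 1], [1, 1, 1])
grazing = shade_lambert(albedo, n, [0, np.sin(np.pi / 3), np.cos(np.pi / 3)], [1, 1, 1])
behind = shade_lambert(albedo, n, [0, 0, -1], [1, 1, 1])
```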

Given a multi-view capture of a clothed human in a single pose, we first extract the garment mesh and fit a 3DGS representation whose Gaussian splats are embedded in that mesh. Simultaneously, we estimate an albedo map from the ground-truth image and back-project it onto the mesh to produce a textured mesh. At inference, the mesh is shaded with a physically-based shading model, and the resulting mesh colors are transferred to the zeroth-order spherical harmonics coefficients of the pre-optimized splats. Finally, we combine the high-pass component of the original Gaussian splat reconstruction with the low-pass component of the traditionally shaded result to produce the final render.
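The two inference steps in the paragraph above can be sketched as follows, under stated assumptions. The color transfer assumes the convention of the reference 3DGS implementation, where a splat's view-independent color is `0.5 + C0 * f_dc` with `C0 = 1 / (2 * sqrt(pi))`, the constant of the degree-0 spherical harmonic. The band combination assumes it happens in image space with a Gaussian blur as the low-pass filter; the filter, its `sigma`, and all function names are hypothetical choices rather than the paper's.

```python
import numpy as np

SH_C0 = 0.28209479177387814  # 1 / (2 * sqrt(pi)), degree-0 SH basis constant


def rgb_to_sh_dc(rgb):
    """Shaded mesh color -> zeroth-order SH coefficient (3DGS convention)."""
    return (rgb - 0.5) / SH_C0


def sh_dc_to_rgb(dc):
    """Zeroth-order SH coefficient -> view-independent color."""
    return SH_C0 * dc + 0.5


def gaussian_kernel(sigma):
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()  # normalized so constant images are preserved


def blur(img, sigma):
    """Separable Gaussian low-pass over the two image axes of an (H, W, C) array."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    out = img.astype(float)
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (r, r)
        padded = np.pad(out, pad, mode="edge")  # replicate borders
        out = np.apply_along_axis(lambda m: np.convolve(m, k, mode="valid"), axis, padded)
    return out


def combine_bands(gs_render, shaded_render, sigma=2.0):
    """High-pass of the Gaussian render plus low-pass of the shaded render."""
    high = gs_render - blur(gs_render, sigma)
    low = blur(shaded_render, sigma)
    return np.clip(low + high, 0.0, 1.0)
```

Splitting by frequency lets the relit, pose-dependent shading drive the smooth appearance while the wrinkles and fabric texture reconstructed by the Gaussians survive unchanged.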

@article{guo2025garments,
  author = {Guo, Michelle and Chiang, Matt Jen-Yuan and Santesteban, Igor and Sarafianos, Nikolaos and Chen, Hsiao-yu and Halimi, Oshri and Bozic, Aljaz and Saito, Shunsuke and Wu, Jiajun and Liu, C. Karen and Stuyck, Tuur and Larionov, Egor},
  title = {Photorealistic Simulation-Ready Garments from a Single Pose},
  journal = {arXiv},
  year = {2025},
}